Building the right product takes a lot of measurements and data. One of the most well-known methods of measuring product is A/B testing.

Measuring a product, and doing things like A/B testing right, is hard.

Having a hard time understanding A/B testing or seeking to improve your A/B testing efforts? This A/B testing guide is for you.

What is A/B testing?

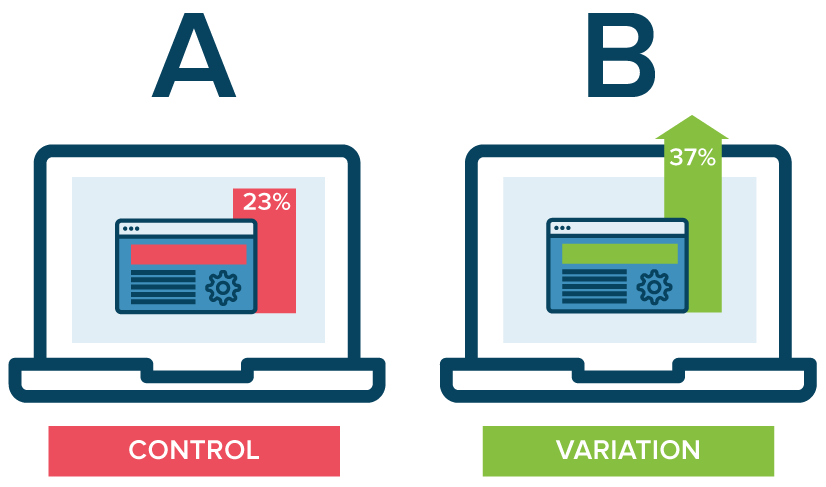

In short, A/B testing is about testing a hypothesis (= any enhancement that a user can view or interact with, like text, components, colors, etc.) against the current view. The current view is usually referred to as ‘the control’ and the tested alternative as ‘the variant’.

For example, you could test which header on a page works best.

The goal of A/B testing

Most would say the goal of A/B testing is to improve the conversion of a specific page or action. In most cases, that is the part where the product makes its money.

Although it’s an important part of A/B testing, the main problem with that statement is the lack of correlation: if you optimize a page, it doesn’t automatically mean the next page or desired interaction in the user journey inherits the conversion uptake. It can actually be the opposite when you signal the wrong message and the expectation is not met further down the line.

That’s probably the biggest misconception and causes a lot of unexplained numbers.

The goal of A/B testing is to test any enhancement(s) on a view in correlation to its flow or funnel in order to improve the overall product experience for its user.

Determine your A/B testing strategy

In order to set up your A/B testing strategy, it is important to ask and answer these questions first:

1. What is your mission (or goal) with A/B testing?

In most products, you just can’t A/B test everything. You have limited resources and traffic. The first step is to determine your actual mission/goal with A/B testing.

Example: We see a lot of cart abandonment on specific price points. We want to use A/B testing to test new hypotheses that improve our (adaptive) pricing display in our sales funnel, so the customer gets the best combination of price and product/upsells.

In this example, it’s very clear what challenge (cart abandonment) you address with A/B testing, the component and the specific funnel. It also addresses the most important thing: the value for the customer. It gives clarity to your product team and stakeholders.

You can always change your goal(s) along the way. They should reflect the product challenges you’re having right now.

Remember: A/B testing isn’t to randomly test/optimize stuff. You need to identify real friction points in your product that need improvement. Without this clarity A/B testing will become a useless tool.

2. Front-end and/or backend effort?

Just because users can see every test you run doesn’t mean every test lives on the same layer of your technology stack. Most A/B testing is done frontend-only, as it requires minimal changes to your application code.

How to know if it is frontend or backend without asking your developer: if you only want to test changes to existing components (text, colors, position, etc.), it can be done frontend-only. If you want to test a new component (like a new feature) that requires data your application currently isn’t handling, that would be a backend matter.

3. What kind of operational resources are required?

Now that you know some of the basics, you can determine the amount of resources you need (development, UX, data reporting). This is needed to determine the impact on your product operations.

Some portion of your sprint resources will be allocated to A/B testing and therefore can’t be allocated to core activities like application development.

A/B testing isn’t a free ride: resources cost money. Therefore the last question to answer is an obvious one.

4. Can we expect a certain outcome?

So far you’ve committed time, effort and money to a certain goal. It’s only logical to define some degree of outcome as well. What outcome would you (and your stakeholders) be satisfied with? What outcome would really improve the product value for the user and have an impact on the bottom line?

Congrats, at this point you’ve determined a baseline to do some focused A/B testing. Time to do some setup work!

How to manage your A/B testing pipeline

Your A/B testing pipeline is a separate backlog where you store and prioritize your A/B tests. Managing such a pipeline takes a few steps to get some expected results.

Accepting an A/B test into your pipeline

The first step of a good A/B testing pipeline is defining the acceptance criteria for a test story. You now have a well-defined A/B testing mission, so you should also set the bar for the quality of A/B testing proposals. Communicating acceptance requirements to your stakeholders upfront is essential.

Minimum acceptance criteria for an A/B test:

- Hypothesis: is this an experiment that resonates with our defined mission?

- Definition: is it well described? Use precise instead of vague words.

- Implementation: what is needed to deploy this test? Know how resource-heavy it is.

- Possible (user value) outcome: what do we expect as outcome? How will any outcome of this test help product value towards users?

If one of the above isn’t answered sufficiently, the test shouldn’t be accepted into your pipeline.

Determining your pipeline speed

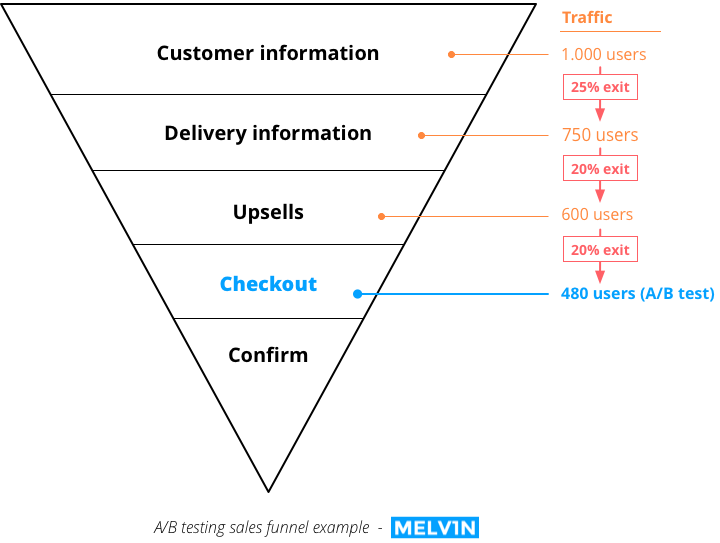

The time it takes to complete an A/B test depends on the funnel depth of your test. Not every page gets the same amount of traffic.

Example: In our efforts to reduce cart abandonment, we deploy an A/B test to see if the checkout conversion will grow once we add PayPal as a new payment option.

The funnel would look like this:

Users exit any real-world funnel in between pages for a range of reasons. The image above paints a clear picture. If I ran an A/B test on the top page of the funnel (Customer information), I would have more than twice the traffic I have on the checkout page.

You need to be aware of this and account for it in your pipeline. You can’t accept too many A/B tests if you don’t have the traffic to generate results in the expected timeframe.

The lower in your funnel you test, the less traffic you have (= the more time one A/B test takes to generate results).
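To make the relation between funnel depth and test duration concrete, here is a minimal sketch. The daily visitor numbers and the required sample size per arm are made-up assumptions for illustration, not figures from the funnel image, and `days_to_finish` is a hypothetical helper.

```python
import math


def days_to_finish(daily_visitors: int, variant_share: float, sample_per_arm: int) -> int:
    """Days until the smaller arm of the test has seen `sample_per_arm` visitors."""
    # The arm with the smaller traffic share fills up last, so it sets the duration.
    smaller_share = min(variant_share, 1.0 - variant_share)
    visitors_per_day = daily_visitors * smaller_share
    return math.ceil(sample_per_arm / visitors_per_day)


# Hypothetical daily traffic per funnel step (assumed numbers).
funnel = {"Customer information": 2000, "Checkout": 900}

for step, visitors in funnel.items():
    days = days_to_finish(visitors, variant_share=0.25, sample_per_arm=5000)
    print(f"{step}: about {days} days")
```

With these assumed numbers, the same test takes more than twice as long on the checkout page as on the top of the funnel, which is exactly why funnel depth belongs in your pipeline planning.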

Stakeholder management on your pipeline

The most important part of your pipeline is your stakeholder management. All the above helps you determine your pipeline, how much can be done and will set your prioritizing strategy.

Here is the most important part for stakeholder management…

Being able to say NO!

There is a point where A/B testing makes no sense or your knowledge clearly indicates that a suggested test will not do any good.

If a suggested A/B test offers no gain, avoid the pain. Say NO.

Implement A/B testing into product operations

Great, you’ve dropped your A/B test into the sea of traffic. Now you wait for the numbers to pass the critical mass needed. Most tools will take care of this themselves. It’s time to check the product operational side of your testing efforts.
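Tools differ in how they decide that enough data has come in. One common approach is a significance test on the two conversion rates. The sketch below shows a standard two-proportion z-test; it is an illustration of the idea, not how any particular tool implements it, and the function name is hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for control A versus variant B."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the assumption that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up example: 100 of 1000 control users convert, 150 of 1000 variant users.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (a common convention is below 0.05) suggests the difference is unlikely to be noise. It says nothing about why the variant works, which is where the rest of the feedback loop comes in.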

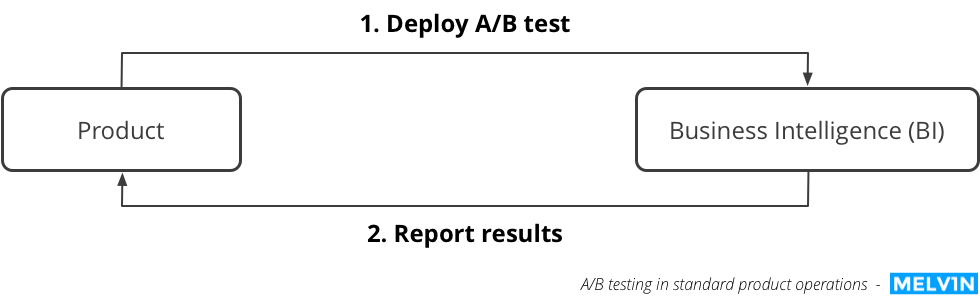

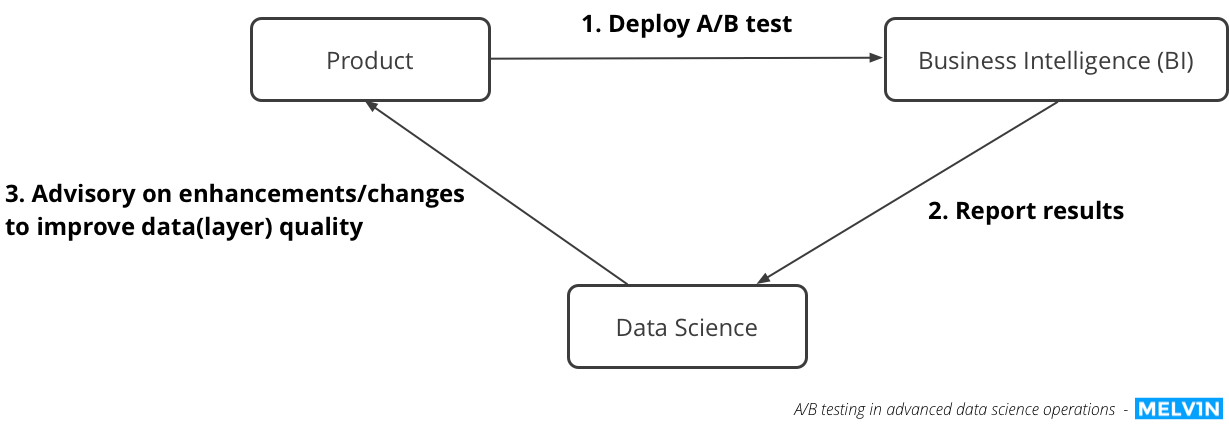

In most product operations you can keep the feedback loop straightforward. The only two departments needed are Product and Business Intelligence (BI).

BI is mainly responsible for two things in this operational cycle: making sure the data of the results are correct and identifying the correlated impact on the funnel.

In product operations with more advanced data science, I prefer to separate BI from Data Science. This is because BI is more about supporting execution (reports, short-term decision effects), while data science is more experimental by nature (data experiments, model training, long-term decision effects).

Data science should have partial influence (or at least an advisory role) on Product to steer data input. The quality of data science depends on basics like clearly defined data input, data validation (on application layer) and data sanitization.

Without ensuring data quality or standards, there is no data science, no machine learning, etc.

You need to understand one important thing here…

If you give a machine crappy data, it will make crappy decisions.

You can put that one-liner on your office wall to remind everyone that it starts with the basics of data, not with fancy tooling or PowerPoint presentations about machine learning.

A/B testing deployment

Warning: some parts here may sound like kung-fu tech. If you don’t have a tech background, don’t feel scared. Read it a few times and you’ll understand the gist of it.

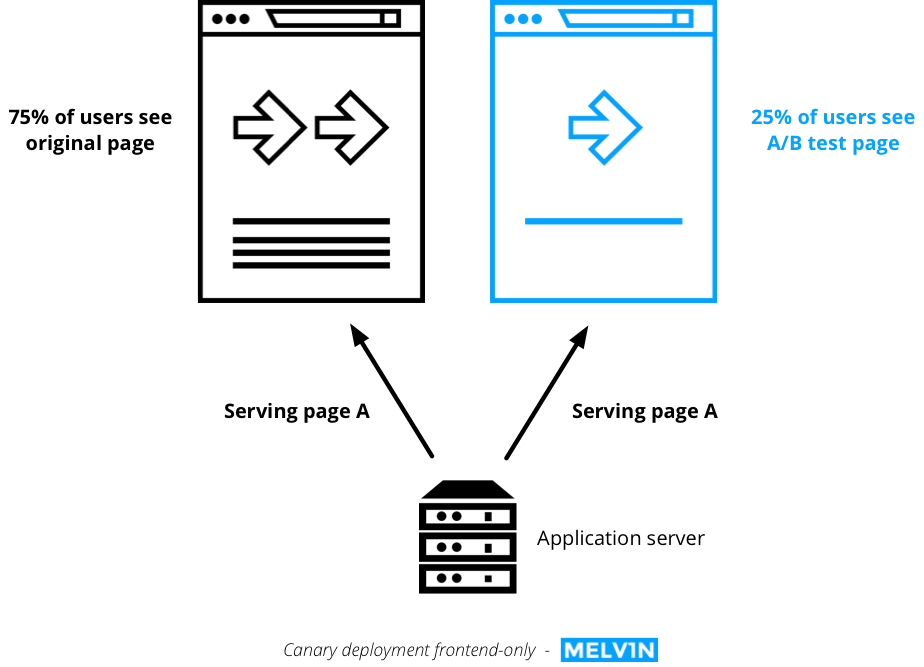

All methods are based on canary deployments. Canary deployment means that a release is only deployed to a subset of users first before rolling out to all users (once it meets expectations).

For the examples below I use 25% traffic to the test variant. If you don’t have a high traffic site, I would recommend a 50% split to speed up the process of generating results.
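Whatever layer does the splitting, a user should keep seeing the same version on every visit. A minimal sketch of one common way to do that is deterministic hashing of a user identifier. The function name, the experiment label and the user IDs are assumptions for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variant_share: float = 0.25) -> str:
    """Deterministically map a user to 'variant' or 'control'."""
    # Including the experiment name gives each experiment its own independent split.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Turn the first 8 bytes of the hash into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "variant" if bucket < variant_share else "control"


# Hypothetical usage: the same user always lands in the same group.
print(assign_variant("user-42", "paypal-checkout"))
print(assign_variant("user-42", "paypal-checkout", variant_share=0.5))
```

Switching to the 50% split is then a single parameter change, and no assignment has to be stored anywhere.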

Canary deployment on frontend-only

This will help you test page elements through something called DOM manipulation. It means that the original page is served by the server to the user, but once it loads in the browser a script transforms some elements into other elements (= your test variant). You’ll need either a frontend developer to write those scripts or an A/B testing tool that will generate them for you.

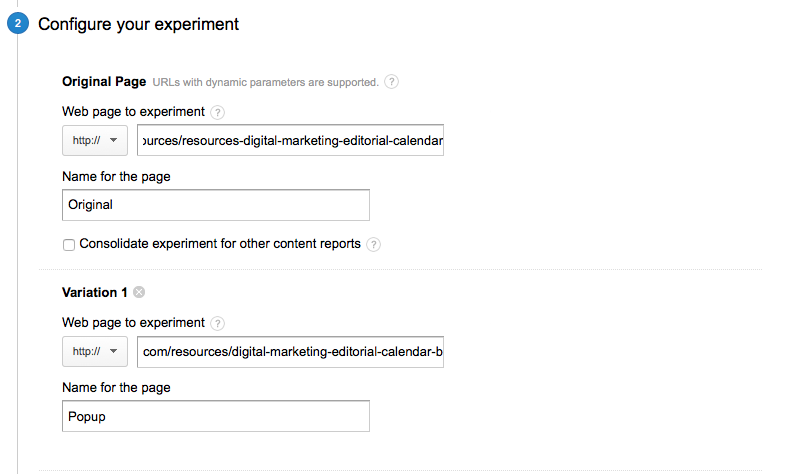

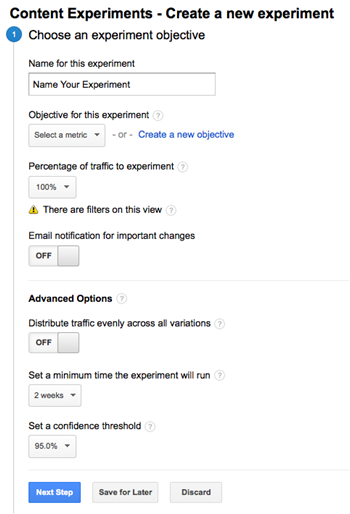

I prefer to use Google Experiments, as most companies use Google Analytics. The two integrate with each other, which avoids needing another tool.

I also use Google Tag Manager (GTM) for this. The main reason is that this implementation saves you from bothering your developers to implement scripts separately every time you want to do an A/B test. It injects scripts into any page automatically. You can deploy an A/B test with GTM any time you want. It keeps all scripts in one place and manageable.

Just follow a few simple steps:

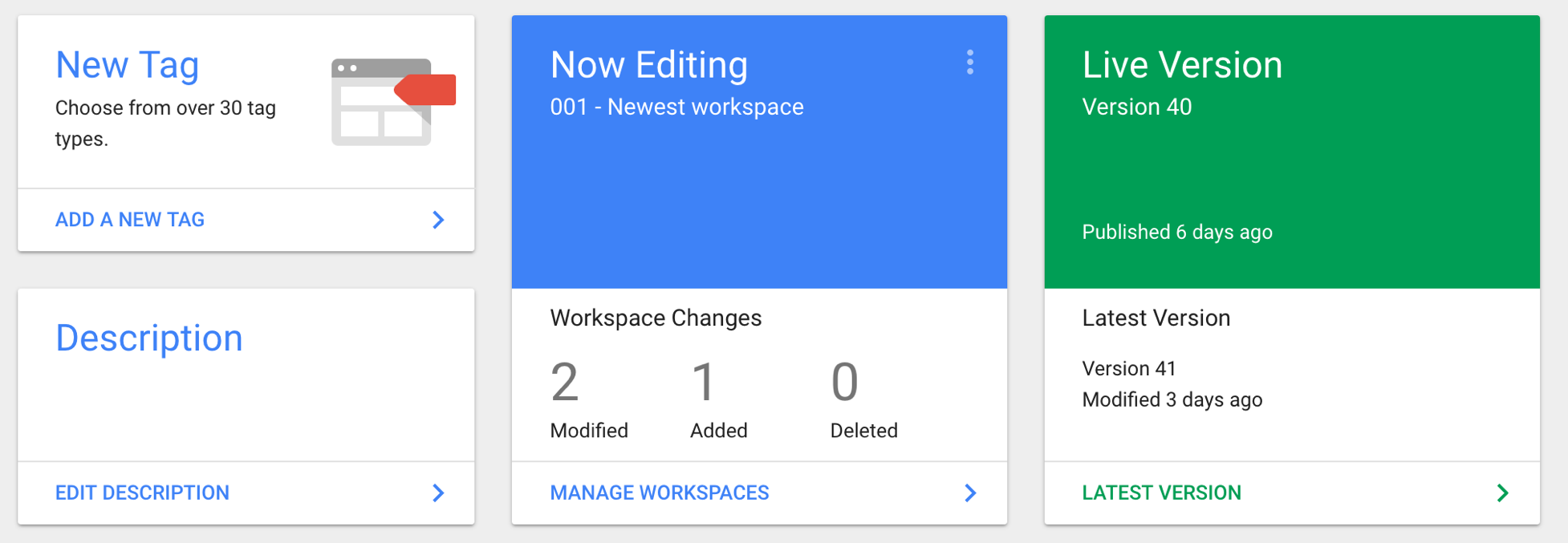

1. Create an experiment

In Google Experiments you create a new experiment. Set an objective and the percentage of traffic that goes to the test variant. If you want a certain amount of data, you can set a custom ‘minimum time of experiment’.

2. Set original/variant

Next you set the original url and the variant url.

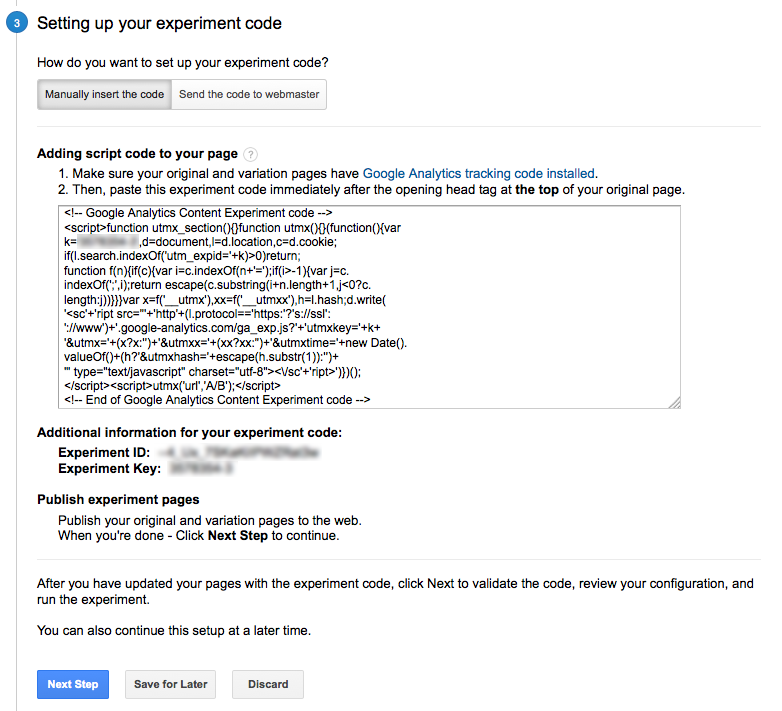

3. Copy the script code

At the end, it will spit out a piece of script code. Copy it to use in GTM.

4. Deploy a new GTM container

Add the code as New Tag and deploy a new version of the GTM container. Make sure the triggers are correct and your developer tests the new script(s) via debug mode before deploying (otherwise you can hit trouble in your production environment).

Deploy and enjoy! GTM will start injecting the new script(s) within a few minutes after deploying without touching any application code.

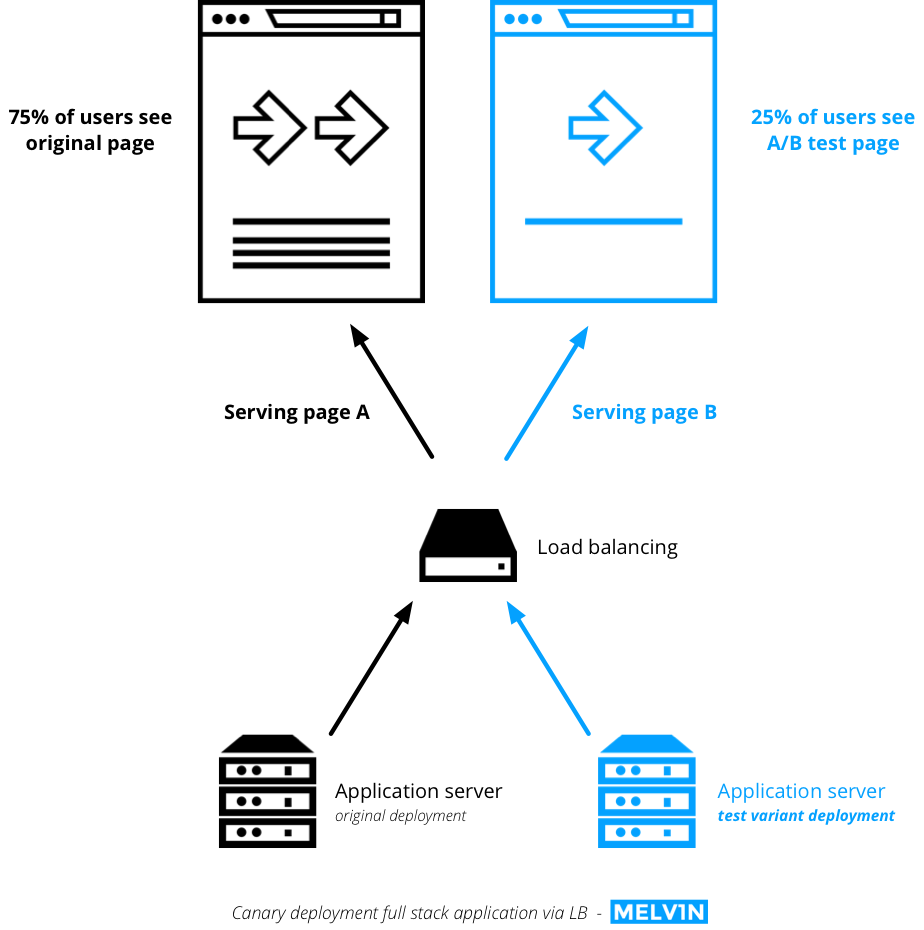

Canary deployment on (full stack) application via load-balancing

As I said before, testing things like new features requires backend work and deployment. In most cases the application that serves the site runs on servers that are load-balanced. This means there is a layer in between, called a load balancer, that spreads traffic over several identical webservers and fails over when needed.

You can use the same principles from the frontend-only section. In this case a portion of the backend servers is deployed with the test variant and the load balancer redirects 25% of the traffic to the test variant servers.

Load balancer configuration

There are some technical details you need to get right to avoid problems. Here is some configuration help for your developers that they can use on their load balancing. The configuration is compatible with HAProxy, one of the most widely used load balancers:

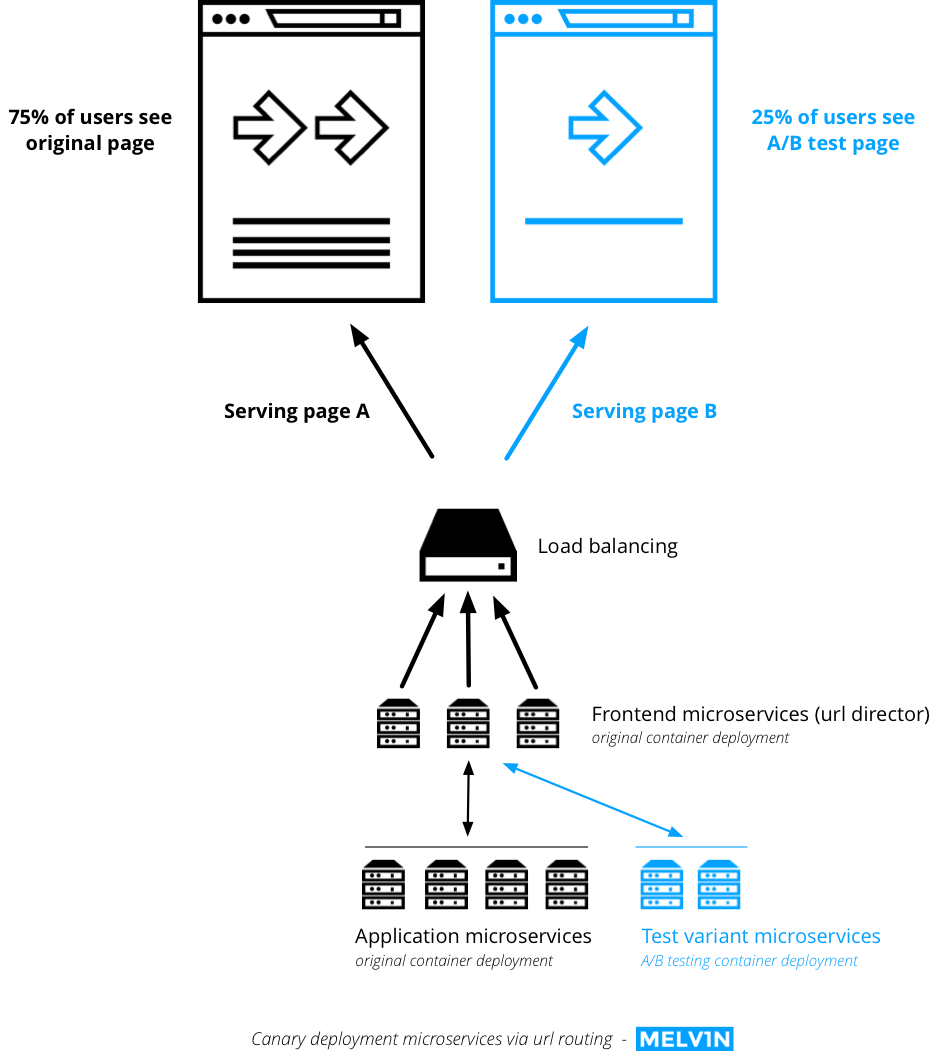

Canary deployment on microservices via url routing

If your stack is microservices-based and supports backend A/B testing, deploying an A/B test can be a whole other ballgame and is a bit more complex.

In a microservices architecture I would suggest doing it via URL routing. Because it’s a containerized (see tech dictionary on containers) environment, it is easier to deploy separate microservices to support the backend services needed for an A/B test and drop them when they’re no longer needed.

It means we can add a specific parameter to a page that tells the microservices that handle requests to redirect internal requests to specific A/B testing microservices to get the specific components.

As a result of deploying separated containers you don’t need to adapt your current load balancing. Make sure the original header request passes through. This way the microservice that handles frontend requests knows how to direct the request.
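A minimal sketch of this kind of URL routing, assuming a query parameter named `ab_variant` and two internal service addresses. All of these names are hypothetical and only illustrate the idea of picking the target service from the URL while passing the original headers through untouched.

```python
from urllib.parse import parse_qs, urlparse

# Hypothetical internal services: the regular one and the temporary A/B one.
SERVICES = {
    "control": "http://pricing.internal",
    "variant": "http://pricing-ab.internal",
}


def route_internal_request(url: str, headers: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Pick the internal service for a page request based on its URL parameter."""
    params = parse_qs(urlparse(url).query)
    variant = params.get("ab_variant", ["control"])[0]
    # Unknown values fall back to the control service.
    target = SERVICES.get(variant, SERVICES["control"])
    # Forward a copy of the original headers unchanged.
    return target, dict(headers)


target, forwarded = route_internal_request(
    "https://shop.example/checkout?ab_variant=variant",
    {"X-Request-Id": "abc123"},
)
print(target, forwarded)
```

When the test is over, you remove the variant entry and drop its container. The regular service and the load balancer never changed.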

Some tips for proper A/B testing

Separate operations for A/B testing: preferably use a separate backlog and deployments that are not in parallel with your application deployments. If you deploy in parallel with your application deployments, you’re wasting valuable time and traffic that you need to reach results.

Don’t focus solely on ‘winners’: only focusing on which variant wins is not what A/B testing is about. It’s about understanding why specific hypotheses do or don’t work. The story behind the data is more important than the data itself.

My personal view: Tests without real conversion uptake (lower than 3%) should be invalidated. This forces focus on real improvements instead of scraping for minor breadcrumbs (with no impact on your NPS).
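As a small worked example of that threshold: the text doesn’t say whether the 3% is relative or absolute, so this sketch assumes a relative uptake over the control’s conversion rate. The function names and the rates are made up for illustration.

```python
def relative_uptake(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant's conversion rate versus the control."""
    return (variant_rate - control_rate) / control_rate


def keep_result(control_rate: float, variant_rate: float, min_uptake: float = 0.03) -> bool:
    """True when the uptake reaches the minimum (assumed: relative) threshold."""
    return relative_uptake(control_rate, variant_rate) >= min_uptake


# Made-up rates: 10.0% control versus 10.2% and 11.0% variants.
print(keep_result(0.100, 0.102))  # about 2% relative uptake
print(keep_result(0.100, 0.110))  # about 10% relative uptake
```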

Give a team member A/B testing ownership: you as a product manager barely have time to go to such in-depth levels of data. You need someone to own the measurements/data-control cycle, ensuring data quality and delivery of testing results. No proper A/B testing cycle = no proper decision making.

Some extra tips (BONUS)

Choose tool(s) wisely: use any A/B testing tool that suits your needs. Make sure that results correlate with your own dataset measurements. Don’t blindly trust numbers you can’t double check.

Don’t forget your (SEO) indexing: make sure to exclude from indexing any (temporary) pages or subdomains you solely use to run A/B tests on. Google will punish your ranking for serving pages that look like duplicates. Rookie mistake you don’t want to make.

Sell A/B testing on managed expectations: don’t sell unicorn magic like ‘site will get more traffic’ and other vanity metric BS. That’s not real stakeholder management. This will backfire 100% later on.

Like this A/B testing guide? Do share this with your colleagues or business friends.

1 comment on The ultimate A/B testing guide for product managers

This is good stuff. I have a couple questions:

Problem: We have a Prod/Dev/QA pipeline (for lack of a better word) and ideas that came from stakeholders get taken in and then ultimately, we just build them out and release them without A/B Testing.

Question: If we want to A/B Test these products FIRST, is it a good idea to have a completely separate A/B Testing pipeline or do we actually bring the idea into our regular pipeline, do the A/B Test there and then push it out if it’s successful??

It seems like at my current place we have two separate teams, but they’re not meshing properly and we’re still releasing products before we’re A/B Testing. Or we’re doing the A/B Test while an item is still having its technical requirements written out. So, it’s confusing.

Finally, how do I justify a team developing a product to A/B Test, testing it, and then it not performing well, and then ultimately it’s wasted code and dev time??